Running applications without any security configuration is convenient for local development, but companies require strict security settings in production, and it is worth enabling authentication in non-production environments as well to keep them similar to production. In this post, we will look at the available options for authentication in Confluent Schema Registry.

In the source code, there is a CachedSchemaRegistryClient class that other libraries reuse to interact with the Schema Registry. One of its responsibilities is caching the schema IDs returned by the Schema Registry server so that they can be added to Kafka producer events. Kafka producers and consumers don’t send or receive the full schema with each event; only the schema ID is used. Additionally, this class uses the SslFactory class

SslFactory sslFactory = new SslFactory(sslConfigs);

to configure the restService, which is reused by other libraries for communication with Confluent Schema Registry. Among other things, SslFactory supports mTLS authentication using two key store formats:

- JKS files

- PEM files

PEM files appear to be more flexible and easier to use for authentication than JKS files. This functionality was added in this PR: https://github.com/confluentinc/schema-registry/issues/2063 (find more details in this article: https://medium.com/expedia-group-tech/kafka-schema-registry-pem-authentication-bb434f32f99f). Additionally, if you already use one of these authentication types, such as PEM, for Kafka brokers, it makes sense to reuse the same mechanism for Schema Registry.
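To make the two options more concrete, here is a minimal sketch of the client-side settings for mTLS with either format. It assumes that the Schema Registry client accepts Kafka's standard ssl.* settings under the schema.registry. prefix; the class name, file paths, and passwords are hypothetical placeholders rather than anything taken from the Schema Registry source.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper that builds mTLS settings for a Schema Registry client. */
class SchemaRegistryMtlsConfig {

    // Assumption: the client reads Kafka-style ssl.* settings under this prefix.
    static final String PREFIX = "schema.registry.";

    /** mTLS with JKS key store and trust store files (paths and passwords are placeholders). */
    static Map<String, Object> jks(String keyStorePath, String keyStorePassword,
                                   String trustStorePath, String trustStorePassword) {
        Map<String, Object> config = new LinkedHashMap<>();
        config.put(PREFIX + "ssl.keystore.type", "JKS");
        config.put(PREFIX + "ssl.keystore.location", keyStorePath);
        config.put(PREFIX + "ssl.keystore.password", keyStorePassword);
        config.put(PREFIX + "ssl.truststore.type", "JKS");
        config.put(PREFIX + "ssl.truststore.location", trustStorePath);
        config.put(PREFIX + "ssl.truststore.password", trustStorePassword);
        return config;
    }

    /** mTLS with PEM files: one file with the client key and certificate chain, one with the CA certificates. */
    static Map<String, Object> pem(String keyAndCertPath, String caCertPath) {
        Map<String, Object> config = new LinkedHashMap<>();
        config.put(PREFIX + "ssl.keystore.type", "PEM");
        config.put(PREFIX + "ssl.keystore.location", keyAndCertPath);
        config.put(PREFIX + "ssl.truststore.type", "PEM");
        config.put(PREFIX + "ssl.truststore.location", caCertPath);
        return config;
    }

    public static void main(String[] args) {
        // Print the PEM variant; it contains no passwords, only file locations.
        pem("/etc/secrets/client.pem", "/etc/secrets/ca.pem")
                .forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

A map like this could then be merged into the producer or consumer properties, or passed as the configuration argument when constructing the client directly. Note that the PEM variant needs no store passwords unless the private key itself is encrypted, which is part of what makes it easier to handle.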

Also, there are classes under the io.confluent.kafka.schemaregistry.client.security package:

- SaslBasicAuthCredentialProvider

- StaticTokenCredentialProvider

- BasicAuthCredentialProvider

These classes are a good starting point for exploring basic authentication (the Basic scheme is defined in RFC 7617, within the HTTP authentication framework of RFC 7235) and bearer token-based authentication. Providers are loaded through java.util.ServiceLoader, which allows the required providers, including custom implementations, to be loaded dynamically.
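Whatever provider is used, the credentials end up in an HTTP Authorization header. The sketch below shows what the two header values look like, using only the JDK. It does not use the Confluent provider classes; the class and method names are hypothetical, and the token value is a placeholder.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Hypothetical helper that builds Authorization header values with the JDK only. */
class AuthHeaders {

    /** Basic scheme: "Basic " followed by Base64 of "user:password". */
    static String basic(String user, String password) {
        String userInfo = user + ":" + password;
        return "Basic " + Base64.getEncoder()
                .encodeToString(userInfo.getBytes(StandardCharsets.UTF_8));
    }

    /** Bearer scheme: "Bearer " followed by the token as issued. */
    static String bearer(String token) {
        return "Bearer " + token;
    }

    public static void main(String[] args) {
        System.out.println("Authorization: " + basic("ckp_tester", "test_secret"));
        System.out.println("Authorization: " + bearer("placeholder-token"));
    }
}
```

Because Base64 is an encoding and not encryption, basic authentication should only be used over TLS outside of local experiments.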

You will find that the commercial version has rich security features out of the box:

https://docs.confluent.io/platform/current/security/basic-auth.html#basic-auth-sr

https://docs.confluent.io/platform/current/kafka/configure-mds/ldap-auth-mds.html#ldap-auth-mds

One example is the Metadata Service (MDS), which allows granular configuration of RBAC permissions (Schema Registry RBAC).

Refer also to the ACL authorizer for more details (Schema Registry ACL Authorization).

Using JAAS in Schema Registry enables support for different types of authentication methods.

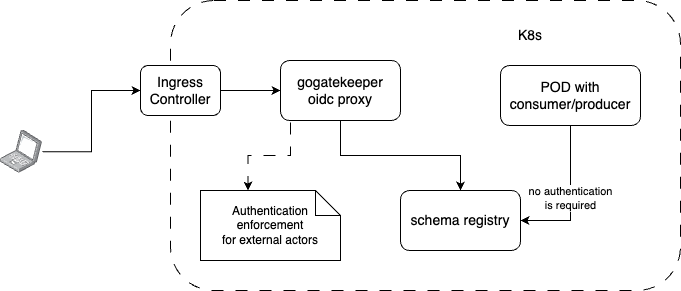

Under some circumstances, you may find it helpful to use an architecture where existing services inside Kubernetes still access Schema Registry without authentication, while clients outside the Kubernetes cluster (developers who manipulate schemas) are required to authenticate. For example, in a first iteration you may want to enable authentication only for developers connecting from outside the cluster, to mitigate the risk of accidental schema modification. Services can then continue working with Schema Registry without authentication. Enabling authentication for everything at once would prevent producers and consumers from retrieving Avro schemas until each of them is reconfigured, so you would have to switch all services simultaneously to avoid downtime.

With this approach, it is still possible to gain direct access to Schema Registry inside Kubernetes through services or directly to pods.

Moreover, it may be more advantageous to use a GitOps approach for Avro schemas with the schema-registry-gitops command-line utility. With this in mind, we have:

- A Git repository with Avro schemas separated between different teams, domains, or services by directories.

- Continuous Integration (CI) that builds the Avro schemas and pushes the resulting file (a JAR for Java) to an artifact repository such as Nexus.

This approach allows us to manage Avro schemas at scale. Producers and consumers keep a cached version of the schema, but they won’t work without the Schema Registry. According to the schema-registry-gitops documentation, the utility supports at least TLS authentication.

To put the theory into practice, you may find this GitHub repository useful for your authentication-specific experiments. It consists of:

- Zookeeper

- Kafka

- Confluent Schema Registry

- Java producer test applications that use Schema Registry with authentication

To get started with the example code, follow these steps:

1. Clone the repository and start Zookeeper:

git clone https://github.com/kostiapl/schema-registry-auth.git

cd schema-registry-auth && docker compose -f common.yml -f zookeeper.yml up

2. Wait until Zookeeper has started, then run the following in another terminal window (for example, in Visual Studio Code):

docker compose -f common.yml -f kafka.yml up

This starts the Kafka broker along with Confluent Schema Registry.

3. Open the avro-test-producer project in your preferred IDE. Execute the package lifecycle phase (this generates Java code from Customer-v1.avsc), and then you can run and debug the code. The Kafka port of the Docker container is exposed on localhost port 19092, so your code can connect to the broker at 127.0.0.1:19092. The corresponding Java configuration is:

properties.setProperty("bootstrap.servers", "127.0.0.1:19092");

// confluent schema registry port

properties.setProperty("schema.registry.url", "http://127.0.0.1:8081");

Before you can run the producer, you need to register a new schema using the curl command shown below:

jq '. | {schema: tojson}' ./test-avro.avsc | \
  curl -X POST http://localhost:8081/subjects/test-avro/versions/ \
  -H "Content-Type: application/json" \
  -d @-

4. Create a new topic by using the command:

docker compose -f common.yml -f init-topics.yml up
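The schema registration from step 3 can also be done from Java instead of jq and curl. The following is a minimal sketch under a few assumptions: Java 11 or later (for java.net.http), the same URL and file name as in the curl command, and a hypothetical class name. Unlike jq's tojson, it keeps the schema's original whitespace instead of compacting it, which should not matter to the registry.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.file.Files;
import java.nio.file.Path;

/** Hypothetical Java counterpart of the jq + curl registration command. */
class RegisterSchema {

    /** Wraps the schema text in {"schema": "<escaped schema text>"}. */
    static String toPayload(String schemaJson) {
        StringBuilder escaped = new StringBuilder();
        for (char c : schemaJson.toCharArray()) {
            switch (c) {
                case '"':  escaped.append("\\\""); break;
                case '\\': escaped.append("\\\\"); break;
                case '\n': escaped.append("\\n"); break;
                case '\r': escaped.append("\\r"); break;
                case '\t': escaped.append("\\t"); break;
                default:
                    // Remaining control characters must be written as \\uXXXX escapes in JSON.
                    if (c < 0x20) escaped.append(String.format("\\u%04x", (int) c));
                    else escaped.append(c);
            }
        }
        return "{\"schema\":\"" + escaped + "\"}";
    }

    public static void main(String[] args) throws Exception {
        String schema = Files.readString(Path.of("./test-avro.avsc"));
        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("http://localhost:8081/subjects/test-avro/versions"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(toPayload(schema)))
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // Print the status code and the response body returned by the registry.
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

Once authentication is enforced on the registry, the same request would additionally need an Authorization header.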

To enforce authentication to Confluent Schema Registry, use the following configuration options:

1. Environment variables in the Docker Compose file (here we enable basic authentication):

SCHEMA_REGISTRY_AUTHENTICATION_METHOD: BASIC

SCHEMA_REGISTRY_AUTHENTICATION_REALM: SchemaRegistry

SCHEMA_REGISTRY_AUTHENTICATION_ROLES: Testers

SCHEMA_REGISTRY_OPTS: -Djava.security.auth.login.config=/etc/schema-registry/jaas_config.conf

2. A jaas_config.conf configuration file with content like the following:

SchemaRegistry {
  org.eclipse.jetty.jaas.spi.PropertyFileLoginModule required
  file="/etc/schema-registry/login.properties"
  debug="true";
};

where login.properties contains the login, secret, and roles for BASIC authentication, for example:

ckp_tester:test_secret,Testers
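With the registry configured this way, the Java producer needs matching credentials. The sketch below extends the producer properties shown earlier with the user from login.properties. It assumes the Confluent serializer picks up basic.auth.credentials.source and basic.auth.user.info from the client configuration; the class name is hypothetical, and in a real setup the secret would come from the environment or a secret store, not from source code.

```java
import java.util.Properties;

/** Hypothetical producer configuration for a registry that enforces BASIC authentication. */
class AuthenticatedProducerConfig {

    static Properties build(String user, String secret) {
        Properties properties = new Properties();
        properties.setProperty("bootstrap.servers", "127.0.0.1:19092");
        // confluent schema registry port
        properties.setProperty("schema.registry.url", "http://127.0.0.1:8081");
        // Assumption: credentials are supplied inline as "user:secret" via USER_INFO.
        properties.setProperty("basic.auth.credentials.source", "USER_INFO");
        properties.setProperty("basic.auth.user.info", user + ":" + secret);
        return properties;
    }

    public static void main(String[] args) {
        Properties properties = build("ckp_tester", "test_secret");
        // Print only the property names so the secret does not end up in logs.
        properties.stringPropertyNames().forEach(System.out::println);
    }
}
```

The user must have one of the roles listed in SCHEMA_REGISTRY_AUTHENTICATION_ROLES (Testers in this example); otherwise the registry should reject the request even when the password is correct.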

References

https://docs.rundeck.com/docs/administration/security/authentication.html

https://github.com/confluentinc/confluent-kafka-python/tree/master